FRIDAY, 27 APRIL 2012

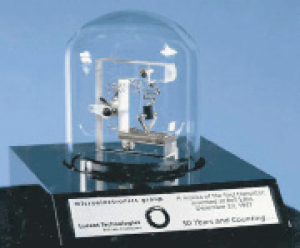

Television, radio, internet, smartphones, laptops, MP3 players, DVD players: could you imagine your life without these things now? While we increasingly take them for granted, all of these inventions owe their existence to what is arguably the most important technological breakthrough in the past 50 years: the development of microelectronics. This global revolution has its origins in a single milestone: the invention of the transistor by three physicists at Bell Telephone Laboratories, USA, on 23rd December 1947. John Bardeen, Walter Brattain and William Shockley became known as ‘the transistor three’. Their invention has been so important for the evolution of human communication that historians compare it to the development of written alphabets in 2000 BC and the printing press in the mid-15th century. The transistor marked the birth of the ‘Information Age’, permitting inventions that would change forever the way in which people communicate.

But what are transistors, and why are they so important? When Bardeen, Brattain and Shockley were working at Bell Labs in 1945 under the direction of Mervin J Kelly, mechanical switches were used to operate telephone relays. However, these switches were notoriously slow and unreliable, and Kelly quickly realised that an electronic switch could have enormous commercial potential. He told his employees simply, “Replace the relays out of telephone exchanges and make the connections electronically. I do not care how you do it, but find me an answer and do it fast”. After two years of intensive research, the transistor—a solid-state on/off switch controlled by means of electrical signals—was born. Transistors had the additional advantage that they could be used to amplify electrical signals over a range of frequencies, making them perfect for long-distance TV and radio transmission.

Bell Labs’ transistor easily met the three criteria required for phenomenal success in the technology world: it outperformed its competitors, it was more reliable and it was cheaper. The potential for technological revolution was foreseen by Fortune magazine, which declared 1953 “the year of the transistor”. Bardeen, Shockley and Brattain’s achievement even earned them the Nobel Prize in Physics in 1956.

A key limitation, however, was that transistors were made and packaged one at a time and then assembled individually into circuits. As the number of transistors per circuit increased from a handful to dozens, the complexity of the wiring required to connect them increased exponentially, a problem known as ‘the tyranny of numbers’. The solution to this problem, and the greatest leap forward in the microelectronics revolution, came shortly afterwards, in 1958 and 1959, courtesy of Jack Kilby, an engineer working at Texas Instruments, and Robert Noyce, a physicist at Fairchild Semiconductor. Working entirely independently, both men managed to design and manufacture an integrated circuit (IC) from a single piece of semiconductor: Kilby used germanium, while Noyce used silicon. The IC comprised a complete circuit with transistors as well as additional elements such as resistors and capacitors contained within a single chip. While both companies filed for patents for the invention, they eventually decided, after several years of legal dispute, to cross-license the technology. Such was the importance of the IC that, in 2000, Kilby received the Nobel Prize in Physics.

But the key to the transistor’s enormous success and ubiquity is arguably its compatibility with digital technology. The language of computing is binary code—a stream of ‘1’s and ‘0’s. This is perfectly suited to transistors, which can encode ‘0’ as ‘off’ and ‘1’ as ‘on’. This creates streams of digital bits that can flow between electronic systems. The ‘Age of Information’ speaks binary.
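The mapping between binary digits and switch states can be illustrated with a minimal Python sketch. The function names (`to_bits`, `to_switch_states`, `from_bits`) and the choice of UTF-8 with 8 bits per byte are assumptions made purely for illustration; real hardware represents bits as voltage levels rather than text labels.

```python
from typing import List


def to_bits(text: str) -> str:
    """Encode text as space-separated groups of 8 binary digits (UTF-8 bytes)."""
    return " ".join(f"{byte:08b}" for byte in text.encode("utf-8"))


def to_switch_states(bits: str) -> List[str]:
    """Map each binary digit to a switch state: '1' -> 'on', '0' -> 'off'."""
    return ["on" if digit == "1" else "off" for digit in bits if digit in "01"]


def from_bits(bits: str) -> str:
    """Decode space-separated 8-bit groups back into text."""
    return bytes(int(group, 2) for group in bits.split()).decode("utf-8")


if __name__ == "__main__":
    encoded = to_bits("A")
    print(encoded)                    # the letter 'A' as eight binary digits
    print(to_switch_states(encoded))  # the same digits as on/off states
    print(from_bits(encoded))         # decoded back to the original text
```

Each character becomes a fixed pattern of eight on/off states, and the same pattern can be read back to recover the original text.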

The final boost for the microelectronics industry and the integrated circuit market came unexpectedly (and inadvertently) from John F Kennedy in 1961, when he spoke of his vision to put a man on the Moon. ICs were compact, lightweight and cheap to manufacture, making them ideal components for the computers on board NASA’s spacecraft. The race to build ever more powerful ICs was on. A full IC contained 10 components in 1963, 24 in 1964 and 50 by 1965. It was Gordon Moore, who would later co-found Intel and serve as its CEO, who first noted this trend in 1965 and summarised it in a simple statement that would later become known as Moore’s Law: “The complexity for minimum component cost has increased at a rate of roughly a factor of two per year…certainly this rate is expected to continue.” However, it was not until 1971 that Intel launched its first commercially available microprocessor IC, the Intel 4004, a device containing 2,300 transistors within a single silicon chip. Accepting digital data as an input, the microprocessor would transform it according to instructions stored in the memory and provide the result as a digital output.
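The arithmetic behind the doubling trend can be checked with a short Python sketch using only the component counts quoted above. The names `COMPONENTS`, `growth_factors` and `project` are hypothetical helpers, and the projection simply assumes an idealised doubling every year; it is not a description of how Moore derived his estimate.

```python
# Component counts per IC as quoted in the article.
COMPONENTS = {1963: 10, 1964: 24, 1965: 50}


def growth_factors(counts):
    """Return the year-on-year growth factor for each consecutive pair of years."""
    years = sorted(counts)
    return {
        later: counts[later] / counts[earlier]
        for earlier, later in zip(years, years[1:])
    }


def project(start_count, start_year, target_year, factor=2.0):
    """Project a count forward, assuming it multiplies by `factor` every year."""
    return start_count * factor ** (target_year - start_year)


if __name__ == "__main__":
    for year, factor in growth_factors(COMPONENTS).items():
        print(f"{year}: x{factor:.2f} over the previous year")
    # Idealised doubling from 50 components in 1965 (an assumption).
    print(project(50, 1965, 1970))
```

Both observed year-on-year factors come out slightly above two, which is consistent with the "roughly a factor of two per year" in the quotation.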

The first destination of Intel’s 4-bit microprocessor was the central processing unit (CPU) of a high-end desktop calculator for the Japanese manufacturer Busicom. The success of this first implementation did not go unnoticed. Soon a number of companies, such as Motorola, Zilog and MOS Technology, were investing in microprocessor ICs. In 1974 the intense competition for market share led to the development of the more advanced 8-bit processors, as well as a substantial reduction in price from hundreds to just a few dollars.

These advanced processors found applications in embedded systems, such as engine control modules for reducing exhaust emissions, but were mainly used in microcomputers. It was a perfect time for ambitious electronics enthusiasts. Perhaps the most notable were Steve Wozniak and Steve Jobs, who in 1976 gathered 62 individual ICs to assemble the first practical personal computer, the Apple I, which sold for $666.66, almost three times the manufacturing cost.

After the enormous success of Apple and Commodore computers, IBM launched the IBM PC in 1981. This was the origin of the ubiquitous PC, as IBM’s design was free for anyone to copy. Rapidly, companies such as Intel, AMD, Toshiba, Texas Instruments and Samsung came to provide CPUs with an increasing number of transistors, not only for PCs but also for the multitude of devices and gadgets that fill our homes and lives.

In 1947, only a single transistor existed in the world. It was bulky, slow and expensive. Now there are more than 10 million billion and counting. Nearly 1.5 billion transistors can fit into a single 1 cm² piece of silicon crystal, capable of performing almost 5 billion operations per second. Never before in human history has any industry, at any time, come close to matching the growth rate enjoyed by the microelectronics industry.

M Fernando Gonzalez is a 4th year PhD student in the Department of Physics