WEDNESDAY, 14 MAY 2014

We normally assume that our eyes work in much the same way as a video camera, and as far as the light is concerned, that’s true. Light enters through the pupil and is focused on a range of specific light-sensing cells in the retina. However, there are several evolutionary quirks in the retina’s layout, which means that making an image out of the light that hits it requires a lot of guesswork from the brain.

For instance, we generally think that our eyes send our brain continuous images of the world in front of us. However, much of our vision is based on assuming that things stay the same unless there’s evidence to the contrary. One type of light-sensing cell, the rod cell, is responsible for most of our peripheral vision. These cells only notify our brain when there are significant changes in the light intensity they receive. The other type of light-sensitive cell, the cone cell, is responsible for our central vision and can quickly lose sensitivity to a continuous signal. We’re familiar with the after-effects of looking at a high-contrast picture, or with a bright light being temporarily ‘burned’ into our retinas, but this happens to some degree with all images. To keep our sight sharp, the eye has to keep changing precisely where it looks, a motion that goes on subconsciously even when we are staring at something.

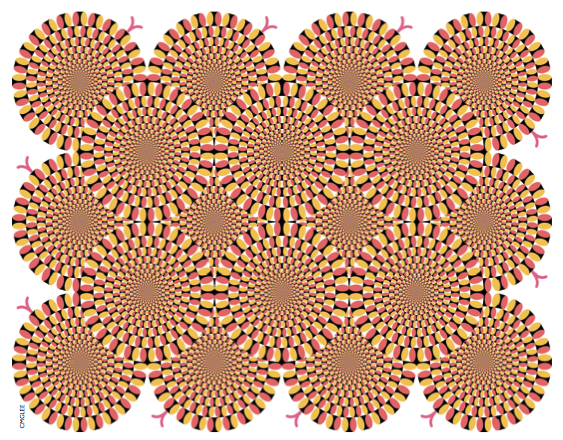

These factors are thought to be behind the apparent motion of several optical illusions; slight movements of the eye will make the after-effect image overlap with different parts of the current image. When the two are superimposed, you get a complicated resultant image where some parts have overlapping similarities and other parts have overlapping differences. If one pattern moves relative to the other even a little, these areas of overlap change, giving the appearance of motion.

The slight shifting of focus by the subconscious when looking directly at something is known as ‘fixation’, but the subconscious controls several other ways of moving the eyes too. The eyes can continuously track a slow-moving object, but can’t move smoothly unless there is something for them to follow. If there isn’t anything to follow, or if the eyes need to move faster, they make sudden jumps known as ‘saccades’. You can see the difference by following your finger as it traces a circle in front of you, and then trying to follow an imaginary circle with nothing there to track. During a saccade, the eye moves as fast as it can, and once the movement has started, it can’t be stopped. Although saccades can be started consciously, they are controlled subconsciously. During a saccade, there is no ‘seeing’ done: the images change too fast, and the brain only processes the signals coming from the eye to see if they have settled down yet.

This temporary blindness is why you can’t see your own eyes move when looking in a mirror. It’s also the explanation behind the stopped-clock illusion. When you look at a clock, the time before the first second passes often seems longer than the time between other seconds. This seems impossible: surely it should be shorter, as you only started looking at the clock some way into the second. The reason is that we add the time it takes our eyes to reach the clock to the time we think we’ve been looking at it. Our brains construct the illusion of continuous experience by filling in the gap of the saccade with what we see after it has finished, so that first view of the clock seems to last a little longer than it really does.

Experiments using eye-tracking software that starts timing when the eyes begin to move show that people think they have been looking at the clock since a few milliseconds before the saccade starts. However, the illusion does not work if the clock is moving relative to the person, whether the eyes are fixated or in a saccade, as the brain does not know what to fill the gap in with. In these cases, people accurately judge how long they have been looking at the clock.

But why can’t we read the clock face unless we look directly at it? Why do things have to be in the centre of our vision for us to read them? The answer goes back to the two types of light-sensitive cells in the eye, the rods and cones. Most cone cells are found directly behind the pupil, in a region of the retina called the fovea. They’re densely packed, which lets us resolve the small details needed for reading. The number of cones drops off steeply away from this spot, where they are replaced by rod cells. Rod cells are more sensitive to low levels of light, but respond to light slightly more slowly than cones. They are more loosely packed, and generally several rod cells send information to the same neuron, so the signal from these cells is much less precise than the signal from cones. This means it is increasingly difficult to make out detail away from what we’re looking at. Interestingly, it also means that we can’t make out the colour of things in the corner of our eyes. The perception of colour outside the centre of our vision is largely reconstructed from memory, as all rods are sensitive to the same part of the spectrum.
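To see why sharing a neuron costs detail, here is a toy sketch in Python. It is only an illustration, not a model of the real retina: the row of 16 receptors, the pool size of four and the simple averaging are all assumptions made for the example. A fine striped pattern survives when each receptor has its own neuron, but vanishes when neighbouring receptors are pooled.

```python
# Toy model (all numbers are illustrative assumptions): a row of receptors
# looks at fine stripes, one bright (1.0) or dark (0.0) stripe per receptor.

def pooled_signal(light, cells_per_neuron):
    """Average each group of neighbouring receptors into one neuron output."""
    return [
        sum(light[i:i + cells_per_neuron]) / cells_per_neuron
        for i in range(0, len(light), cells_per_neuron)
    ]

def contrast(signal):
    """Difference between the brightest and darkest neuron output."""
    return max(signal) - min(signal)

stripes = [1.0, 0.0] * 8  # 16 receptors, alternating bright and dark

cone_like = pooled_signal(stripes, 1)  # one receptor per neuron
rod_like = pooled_signal(stripes, 4)   # four receptors share one neuron

print(contrast(cone_like))  # 1.0: the stripes are resolved
print(contrast(rod_like))   # 0.0: the stripes blur into uniform grey
```

The pooled version still reports how much light arrived overall, which fits with rods being good at detecting dim light, but it can no longer say where the stripes were.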

Cones come in three types that are most sensitive to short, long or medium wavelengths of light, and are therefore known as S-, L- or M-cones. By comparing how strongly neighbouring cones of the three types are activated, the eye can make out colour in the fovea. For unknown reasons, there are considerably fewer S-cones (blue-sensing) than L- or M-cones, and they are also more spread out across the retina. This means that people are less able to make out fine detail in blue, but that blue can be sensed further from the centre of vision. You may also notice that the S-cone sensitivity on the graph is cut off at around 400 nanometres, the short-wavelength limit of visible light. This is not because the S-cone isn’t sensitive to ultraviolet light (in fact, the two other types of cones should show a small peak there too), but because sensitivity there doesn’t matter: the lens in the eye absorbs ultraviolet light. People who have had their lenses removed for medical reasons can see light with wavelengths of 300-400 nanometres, which they report as whitish blue.

Because the eye works with three colour-specific cones, we can’t necessarily tell the difference between green light and a combination of yellow and blue light that stimulates the cones in the same ratio. Most colour technology relies on this fact: computer screens and printers can only produce three different colours, and make up a rainbow by varying the relative amounts of them. However, we also need a way of reporting combinations of light that don’t correspond to anything on the rainbow, such as red and purple light together. This is how we make pink light. Pink, like white and black, isn’t a pure colour found in the rainbow; it’s one of the few signs our eyes give us that most of what we see isn’t just one wavelength of light.
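The idea that different mixtures of light can look identical can be sketched in a few lines of Python. Everything numerical here is an assumption made for illustration: the cone sensitivities are modelled as simple bell curves with rough, made-up peaks and widths (real sensitivity curves are not this tidy), and the wavelengths of the target light and of the three ‘screen’ colours are hypothetical. The sketch works out how bright each of the three screen colours must be to produce exactly the same three cone signals as a quite different mixture of light.

```python
import math

# Hypothetical cone sensitivities: bell curves given as
# (peak wavelength, width) in nanometres. Illustrative values only.
CONES = {"S": (440.0, 25.0), "M": (540.0, 40.0), "L": (570.0, 45.0)}

def cone_response(spectrum):
    """Return (S, M, L) signals for a spectrum given as {wavelength: power}."""
    return tuple(
        sum(power * math.exp(-0.5 * ((wl - peak) / width) ** 2)
            for wl, power in spectrum.items())
        for peak, width in CONES.values()
    )

def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def match_with_primaries(target, primaries):
    """Find primary intensities giving the same cone signals (Cramer's rule)."""
    cols = [cone_response({wl: 1.0}) for wl in primaries]
    # Row i is cone i, column j is primary j.
    matrix = [[cols[j][i] for j in range(3)] for i in range(3)]
    rhs = cone_response(target)
    d = det3(matrix)
    weights = []
    for k in range(3):
        # Replace column k with the target signals.
        replaced = [[rhs[i] if j == k else matrix[i][j] for j in range(3)]
                    for i in range(3)]
        weights.append(det3(replaced) / d)
    return weights

# Hypothetical target: equal parts blue-green (490 nm) and orange (590 nm).
target = {490.0: 1.0, 590.0: 1.0}
# Hypothetical screen colours: blue, green and red.
primaries = [450.0, 540.0, 610.0]

weights = match_with_primaries(target, primaries)
mixture = dict(zip(primaries, weights))

print(cone_response(target))
print(cone_response(mixture))  # same three signals, different light
```

In this toy model the two printed triples agree even though the target and the mixture share no wavelengths, so an eye with only these three signals could not tell them apart. A negative weight would mean the target colour lies outside what those three screen colours can reproduce, which is what happens for some pure rainbow colours.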

Our brains usually give the impression that our eyes see exactly what’s going on in front of us all the time. In reality, a lot of perception is done in the brain, which processes the information from the eyes and combines it with previous information, filling in the many blanks with assumptions. Normally the assumptions are good, so good that we don’t have to notice them. But once we know they are there, it seems amazing that we can make sense of the world at all.

Robin Lamboll is a 1st year PhD student at the Department of Physics